RashCam

COMSATS University Islamabad

RashCam is a smart dash-cam built from state-of-the-art yet cheap and easily available sensors. The device detects bad driving behaviors such as speeding, aggressive driving and hard cornering, and notifies the vehicle owner of these events along with a video clip recorded from the vehicle's dashboard.

Overview

RashCam was our final year project for Bachelor’s degree in Computer Science at COMSATS University Islamabad.

This project consists of three main components:

- RashCam Device (IoT Hardware)
  - Raspberry Pi 4
  - Raspberry Pi Camera
  - IMU Sensor (9DOF)
  - GPS Sensor

- Device Software
  - Python
  - Linux
  - GStreamer
  - WebRTC
  - Mender (OTA Updates)

- Web Application
  - Client App (Next.js)
  - Backend Server (Django)
  - Signaling Server
  - TURN Server (coturn)
  - PostgreSQL Database
  - Redis Cache/Queue
  - Celery Workers

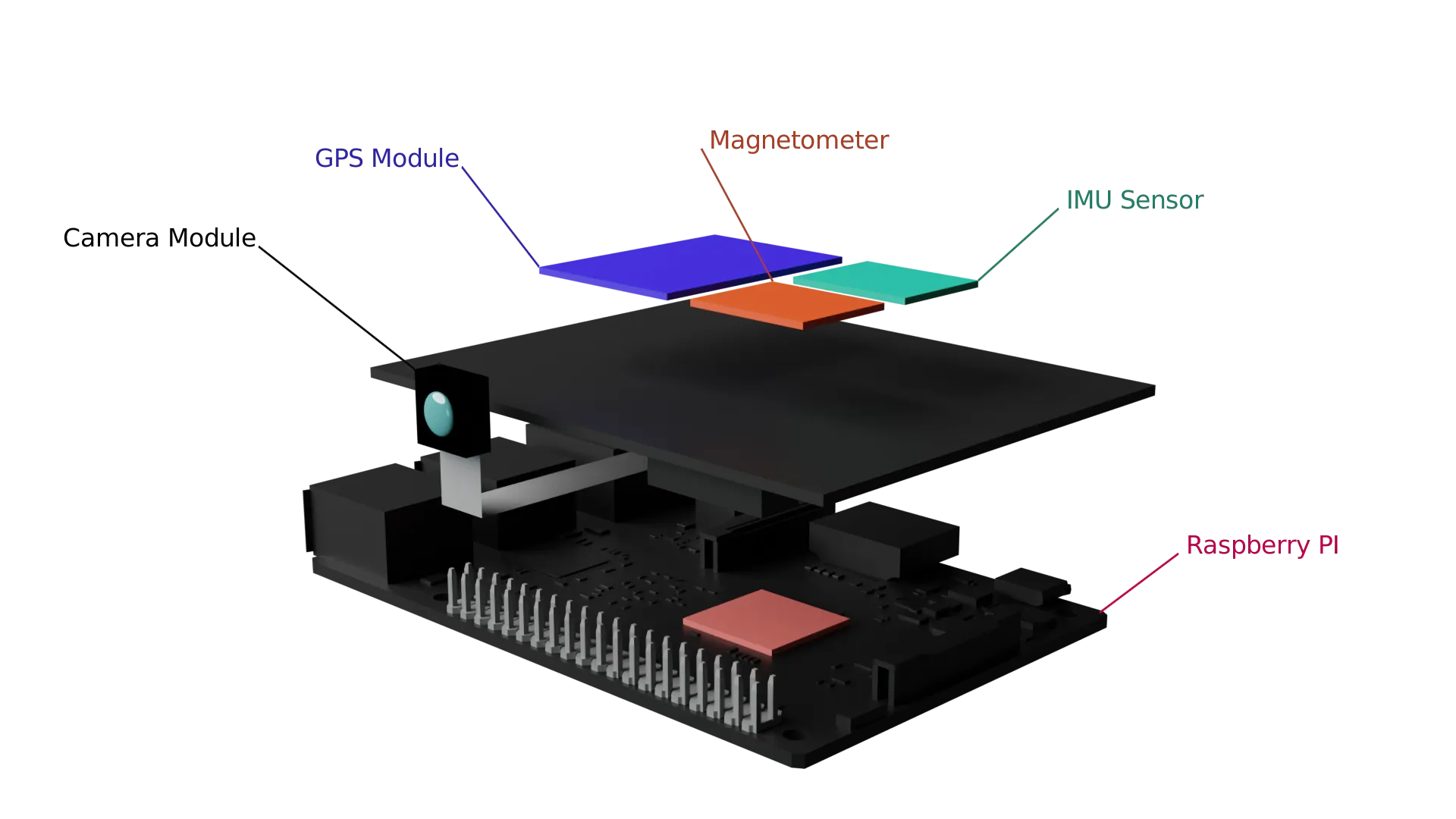

RashCam Device

- Raspberry Pi 4 is the main brain of the device. It is a single-board computer with 4GB of RAM and a 64-bit ARM processor, running Raspberry Pi OS, a Debian-based Linux distribution.

- The Raspberry Pi Camera captures video from the vehicle's dashboard. It is connected to the Raspberry Pi via the CSI interface.

- The IMU sensor, an MPU-9250, measures the device's orientation, linear acceleration, angular velocity and magnetic field. It is connected to the Raspberry Pi via I2C.

- The GPS sensor, a NEO-6M, determines the device's location. It is connected to the Raspberry Pi via UART.
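The NEO-6M, like other u-blox 6 receivers, streams NMEA 0183 text sentences over its UART by default. As a minimal sketch of what the device-side GPS reader has to do, the functions below validate a sentence's checksum and extract latitude/longitude from a GGA (fix data) sentence. Reading lines from the serial port (for example with pyserial) is left out, and the function names are hypothetical, not taken from the RashCam code.

```python
from typing import Optional, Tuple


def nmea_checksum_ok(sentence: str) -> bool:
    """Check the XOR checksum of all characters between '$' and '*'."""
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, given = sentence[1:].partition("*")
    calculated = 0
    for ch in body:
        calculated ^= ord(ch)
    try:
        return calculated == int(given[:2], 16)
    except ValueError:  # missing or non-hex checksum
        return False


def nmea_to_degrees(value: str, hemisphere: str, degree_digits: int) -> float:
    """Convert NMEA ddmm.mmmm (lat) or dddmm.mmmm (lon) to signed decimal degrees."""
    degrees = int(value[:degree_digits])
    minutes = float(value[degree_digits:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (lat, lon) from a GGA sentence, or None if invalid or without a fix."""
    sentence = sentence.strip()
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence[1:].split("*")[0].split(",")
    # Accept any talker ID ($GPGGA, $GNGGA, ...) and require the position fields.
    if not fields[0].endswith("GGA") or len(fields) < 7:
        return None
    lat, lat_hemi, lon, lon_hemi, fix_quality = fields[2:7]
    if fix_quality in ("", "0") or not lat or not lon:
        return None  # receiver has no satellite fix yet
    return nmea_to_degrees(lat, lat_hemi, 2), nmea_to_degrees(lon, lon_hemi, 3)
```

Returning `None` until the fix-quality field is non-zero matches the behavior described later, where GPS events only start once the satellite connection is established.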

For some reason, the magnetometer on our IMU did not work properly, so we used a separate compass module instead.
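Whichever part supplies the magnetometer readings, the Compass filter in the architecture further down reduces them to a direction. A minimal sketch, assuming the sensor is held level and mounted so that `atan2(y, x)` gives the angle clockwise from magnetic north (real axis signs depend on the mounting); the function names and the eight-point output are illustrative assumptions:

```python
import math

POINTS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def heading_degrees(mag_x: float, mag_y: float, declination_deg: float = 0.0) -> float:
    """Heading in [0, 360) from the horizontal magnetometer components.

    Assumes a level sensor whose axes make atan2(y, x) clockwise-from-north;
    flip signs to match the actual mounting. The z axis is ignored because
    this sketch does no tilt compensation.
    """
    heading = math.degrees(math.atan2(mag_y, mag_x)) + declination_deg
    return heading % 360.0  # Python's % maps negative angles into [0, 360)


def compass_point(heading: float) -> str:
    """Map a heading to one of eight 45-degree sectors centred on N, NE, E, ..."""
    return POINTS[int((heading + 22.5) // 45) % 8]
```

Passing the local magnetic declination converts a magnetic heading into a true one; without tilt compensation, the result degrades when the vehicle pitches or rolls.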

Device Software

The software stack of the device is divided into two parts:

- Firmware - The operating system and drivers for the device and all the supporting libraries.

- Application - The main python application which runs on top of the firmware.

Firmware:

OS: We used Raspberry Pi OS, a Debian-based Linux distribution, as our base operating system. We also tried Fedora IoT, but it was not stable enough for our use case.

Video Streaming: We used GStreamer to stream video from the camera. GStreamer is a pipeline-based multimedia framework for building complex pipelines for video streaming, recording, encoding, decoding, transcoding and more.
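To give a feel for what such a pipeline looks like, here is a sketch that builds a gst-launch style description for the camera, encoder and chunk-recorder path. The element choices (`libcamerasrc`, `x264enc`), resolution and file path are assumptions, not RashCam's actual pipeline. With PyGObject, a string like this can be turned into a pipeline via `Gst.parse_launch(description)` after `Gst.init(None)`.

```python
# Sketch of a GStreamer pipeline description: camera -> H.264 encoder -> tee -> chunk recorder.
# All element choices and numbers here are illustrative assumptions.
NANOSECONDS_PER_SECOND = 1_000_000_000


def build_pipeline_description(
    width: int = 1280,
    height: int = 720,
    fps: int = 30,
    chunk_seconds: int = 10,
    max_files: int = 10,
    location: str = "/tmp/rashcam/chunk%02d.mp4",
) -> str:
    """Return a gst-launch style pipeline description string.

    The tee named "t" is where per-connection WebRTC branches could be linked at
    runtime; only the chunk-recorder branch is included here.
    """
    source = f"libcamerasrc ! video/x-raw,width={width},height={height},framerate={fps}/1"
    # One keyframe per second, so splitmuxsink can cut close to each chunk boundary
    # (it can only start a new file on a keyframe).
    encoder = (
        f"videoconvert ! x264enc tune=zerolatency speed-preset=ultrafast key-int-max={fps}"
        " ! h264parse"
    )
    # splitmuxsink starts a new file every max-size-time nanoseconds and keeps at most
    # max-files files on disk, replacing the oldest ones: a 10 x 10 s ring buffer.
    recorder = (
        f"t. ! queue ! splitmuxsink location={location} "
        f"max-size-time={chunk_seconds * NANOSECONDS_PER_SECOND} max-files={max_files}"
    )
    return f"{source} ! {encoder} ! tee name=t {recorder}"
```

Keeping the description in one place makes the chunk length and ring size explicit parameters, which is convenient when the recorder and the upload logic must agree on them.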

OTA Updates: We used Mender, an open-source OTA update manager for embedded Linux devices, which let us update the device software remotely.

Application:

We picked Python as the main language for the application because it is easy to use and flexible. This was especially helpful given how tricky and loosely defined the problem was: it let us quickly try out and change ideas as the project evolved. The GStreamer Python bindings let us build GStreamer pipelines directly in Python.

The nature of the application led us to a fully event-driven architecture that handles the events from the different sensors and video streams and processes them accordingly.

The above diagram shows the flow of events from different sources to different sinks. Before we dive into the details of each component, let’s first define some terms:

- A Source is an event producer.

- A Sink is where events end up after processing.

- A Bin is a special element that acts as a source and a sink at the same time.

- Operators / Filters are the intermediate operations happening on the events. They can skip, combine or map events.
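To make these terms concrete, here is a minimal push-based sketch in Python. The names (`ListSink`, `Chain`, `run_source`) are hypothetical and not taken from the RashCam code; a real source such as the GPS or IMU reader would call `push` from its own thread or callback rather than iterate over a list.

```python
from typing import Any, Callable, Iterable, List, Protocol

# An operator takes one event and returns zero or more events:
# [] skips the event (filter), [x] maps it, and a longer list can release
# a batch of buffered events (combine).
Operator = Callable[[Any], List[Any]]


class Sink(Protocol):
    def push(self, event: Any) -> None: ...


class ListSink:
    """Terminal sink that just records every event it receives."""

    def __init__(self) -> None:
        self.received: List[Any] = []

    def push(self, event: Any) -> None:
        self.received.append(event)


class Chain:
    """Runs each pushed event through the operators in order, then into a sink.

    Because a Chain also has push(), it can serve as another chain's sink,
    loosely mirroring a Bin that is both a sink and a source.
    """

    def __init__(self, operators: Iterable[Operator], sink: Sink) -> None:
        self.operators = list(operators)
        self.sink = sink

    def push(self, event: Any) -> None:
        pending = [event]
        for operator in self.operators:
            pending = [out for item in pending for out in operator(item)]
            if not pending:  # everything was filtered out
                return
        for item in pending:
            self.sink.push(item)


def run_source(events: Iterable[Any], chain: Sink) -> None:
    """A source produces events and pushes each one downstream as it appears."""
    for event in events:
        chain.push(event)
```

For example, pushing `range(5)` through a chain whose operators keep even numbers and then multiply by ten leaves `[0, 20, 40]` in the sink.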

Now with these definitions in mind here is the architecture:

- The GPS Source produces GPS events containing the coordinates once a satellite fix is established.

- A Distinct Filter is applied to these events, letting through only events whose position has changed by at least a minimum amount. For example, a parked vehicle does not produce repeated events with the same coordinates.

- Next, a Throttling Filter limits the number of events per second.

- Finally, the events are pushed to the WebSocket Sink, which is connected to the server over a WebSocket.

- The IMU sensors provide accelerometer, gyroscope and magnetometer readings along the three axes x, y and z.

- The Compass filter takes the three magnetometer axes and returns a 2D compass direction, which is then sent to the WebRTC Bin.

- The IMU sensor data is then passed to the Rolling Filter, which collects n values before emitting an event containing those n values. When it receives the (n+1)th value, it emits values 2 through n+1, discarding the first.

- These n values are passed to the Classifier, which classifies events within this window of values. NOTE: the real implementation of the Rolling Filter is a bit different, to avoid classifying the same event more than once.

- When the classifier detects an event it signals the Split Chunk Recorder.

- Raw frames from the Video Source are passed to the Video Encoder.

- The encoded video is live-streamed on demand via the WebRTC Bin. A WebRTC bin is constructed and added to the pipeline for each new connection.

- The encoded video is also passed to the Split Chunk Recorder, which saves it in 10-second chunks. It writes in a loop: once it has 10 chunks, it overwrites the first one.
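The GPS and IMU operators in this list can be sketched as small callables that take one event and return a list of zero or more output events. This is a minimal illustration, not the project's code: the class names, the 5 m distance threshold, the haversine distance metric, the injectable clock and the `hop` parameter are all assumptions (the description only says the real Rolling Filter differs to avoid duplicate classification, not how).

```python
import math
from collections import deque
from typing import Any, Callable, Deque, List, Optional, Tuple

LatLon = Tuple[float, float]  # (latitude, longitude) in decimal degrees


def haversine_m(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in metres between two coordinates."""
    earth_radius_m = 6_371_000.0
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * earth_radius_m * math.asin(math.sqrt(h))


class DistinctFilter:
    """Lets a fix through only if it is at least min_distance_m from the last one let through."""

    def __init__(self, min_distance_m: float = 5.0) -> None:
        self.min_distance_m = min_distance_m
        self.last: Optional[LatLon] = None

    def __call__(self, fix: LatLon) -> List[LatLon]:
        if self.last is not None and haversine_m(self.last, fix) < self.min_distance_m:
            return []  # e.g. a parked vehicle repeating the same position
        self.last = fix
        return [fix]


class ThrottleFilter:
    """Lets at most one event through per min_interval_s seconds and drops the rest."""

    def __init__(self, min_interval_s: float, clock: Callable[[], float]) -> None:
        self.min_interval_s = min_interval_s
        self.clock = clock  # injectable (e.g. time.monotonic) so tests need no sleeping
        self.last_emit: Optional[float] = None

    def __call__(self, event: Any) -> List[Any]:
        now = self.clock()
        if self.last_emit is not None and now - self.last_emit < self.min_interval_s:
            return []
        self.last_emit = now
        return [event]


class RollingWindow:
    """Buffers the last n samples and emits them as one window every `hop` samples.

    hop=1 is the plain sliding window described above; a larger hop is one
    assumed way to reduce classifying the same event in overlapping windows.
    """

    def __init__(self, n: int, hop: int = 1) -> None:
        self.n = n
        self.hop = hop
        self.buffer: Deque[Any] = deque(maxlen=n)  # oldest sample drops off automatically
        self.skip = 0  # samples still to ignore before the next emission

    def __call__(self, sample: Any) -> List[List[Any]]:
        self.buffer.append(sample)
        if len(self.buffer) < self.n:
            return []  # window not full yet
        if self.skip > 0:
            self.skip -= 1
            return []
        self.skip = self.hop - 1
        return [list(self.buffer)]
```

Feeding samples 1 to 5 into `RollingWindow(3)` yields the windows `[1, 2, 3]`, `[2, 3, 4]` and `[3, 4, 5]`, matching the n to (n+1) behavior described in the list; with `hop=2`, only `[1, 2, 3]` and `[3, 4, 5]` are emitted.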

That is the whole architecture of our RashCam device, but I would like to mention one more piece of the puzzle. When the Classifier signals the Split Chunk Recorder, we need a 30-second video to be uploaded to the server. We achieve this with the following algorithm:

- Copy the previous 10-second chunk, wait for the current and the next chunk to complete, and copy them too.

- If no other event occurs during the next 3 chunks (30s), merge the copied chunks into one video and upload it to the server.

- Otherwise, repeat step one until there is no event for 30s.
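The steps above leave some room for interpretation. One reading, sketched here with a hypothetical `ClipPlanner` class, tracks chunk indices only: an incident in chunk c pulls in chunks c-1 to c+1, any incident during the quiet period extends the clip and restarts the wait, and the clip is released once three more chunks have closed with no new incident. Copying chunks out of the ring buffer (so they cannot be overwritten) and the actual merge and upload are deliberately left out.

```python
from typing import List, Optional


class ClipPlanner:
    """Decides which recorded chunks make up one incident clip.

    Chunks carry an ever-increasing index; the recorder's ring buffer would map
    index % 10 to a file on disk. This is a simplified, hypothetical reading of
    the algorithm, not the project's implementation.
    """

    def __init__(self, quiet_chunks: int = 3) -> None:
        self.quiet_chunks = quiet_chunks  # incident-free chunks required before upload
        self.first: Optional[int] = None  # chunk containing the clip's first incident
        self.last: Optional[int] = None   # chunk containing the most recent incident

    def on_incident(self, current_chunk: int) -> None:
        """Called when the Classifier signals an incident during current_chunk."""
        if self.first is None:
            self.first = current_chunk
        # A later incident extends the clip and restarts the quiet period.
        self.last = current_chunk

    def on_chunk_closed(self, chunk: int) -> Optional[List[int]]:
        """Called when the recorder finishes a chunk; returns the clip once it is complete."""
        if self.first is None or self.last is None:
            return None  # no incident pending
        if chunk < self.last + self.quiet_chunks:
            return None  # still inside the quiet period
        clip = list(range(max(self.first - 1, 0), self.last + 2))  # previous .. next chunk
        self.first = self.last = None
        return clip
```

For a single incident in chunk 5, the planner returns `[4, 5, 6]` (30 seconds of video) when chunk 8 closes; a second incident in chunk 7 would instead produce `[4, 5, 6, 7, 8]` once chunk 10 closes.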

It sounds simple in words, but believe me, it was one of the hardest things we solved in the whole project.

Web Application

The purpose of the application is to provide a dashboard for the user to view the live stream from the device, view the detected incidents and view the recorded videos. The web app also provides a way to configure the device and view the device status.

The RashCam device uses a TURN server for WebRTC communication, WebSockets for signaling, and a REST API for posting data such as detected incidents and video chunks to the server.

The backend server is responsible for providing the REST APIs for the web app and the device. It also provides the signaling server and the TURN server for the WebRTC connections.
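The signaling server's job is to pass SDP offers/answers and ICE candidates between the device and the browser until the two can talk directly (or through TURN). In RashCam this role is played by Django Channels over WebSockets; the framework-free asyncio sketch below only illustrates the relay logic. The class name, the session key (a device id), the role names and the `{"type": ...}` message shape are assumptions.

```python
import asyncio
import json
from typing import Dict


class SignalingRelay:
    """Forwards WebRTC signaling messages between the peers of one session.

    A session is assumed to be keyed by device id and to hold two roles,
    "device" and "viewer"; both names are hypothetical.
    """

    RELAYED_TYPES = {"offer", "answer", "candidate"}

    def __init__(self) -> None:
        # session id -> role -> inbox of messages waiting to be sent to that peer
        self.sessions: Dict[str, Dict[str, asyncio.Queue]] = {}

    def join(self, session_id: str, role: str) -> asyncio.Queue:
        """Register a peer and return the queue its connection handler should drain."""
        inbox: asyncio.Queue = asyncio.Queue()
        self.sessions.setdefault(session_id, {})[role] = inbox
        return inbox

    def leave(self, session_id: str, role: str) -> None:
        """Unregister a peer, dropping the session once it is empty."""
        peers = self.sessions.get(session_id, {})
        peers.pop(role, None)
        if not peers:
            self.sessions.pop(session_id, None)

    async def relay(self, session_id: str, sender: str, raw: str) -> bool:
        """Forward a JSON signaling message to every other peer; False if it was dropped."""
        message = json.loads(raw)
        if message.get("type") not in self.RELAYED_TYPES:
            return False  # not an SDP offer/answer or an ICE candidate
        peers = self.sessions.get(session_id, {})
        others = [inbox for role, inbox in peers.items() if role != sender]
        for inbox in others:
            await inbox.put(raw)  # the payload is passed through untouched
        return bool(others)
```

The relay never inspects the SDP itself; it only routes messages, which is why the media can later flow peer-to-peer or via coturn without touching the Django server.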

The backend was built using a number of technologies:

- At the core of everything is the Django web server. It uses Django REST framework to provide REST APIs and Django Channels for WebSocket connections.

- A TURN server, which is simply a deployed coturn instance.

- A PostgreSQL database that stores user authentication information, device info, and detected trips and incidents.

- Redis, which serves as the cache provider for the backend server and as the message queue between Django and the Celery workers.

- Celery workers, which offload background tasks from the web server.

On the frontend side, Next.js serves the web app. The web app is quite simple, with three or four routes besides the authentication pages, and it communicates with the backend via REST APIs.

Conclusion

The nature of this project was very different from the kinds of projects we were comfortable developing, but that was one of the reasons we chose it. We wanted to learn new things, and we did. We faced a lot of issues during development; some were easy to solve and some were really hard, but we managed to solve them all.

We learned a lot of new things during the development of this project:

- About the GPS, IMU sensors, WebRTC, WebSockets, Django Channels, Celery, Docker, CI/CD etc.

- To work in a team and how to manage a project.

- To divide a project into small tasks and how to assign those tasks to team members.

- To communicate with each other and how to help each other.

- To manage our time and how to meet deadlines.

- To work under pressure and how to solve problems.

- To debug and how to find solutions to problems.

- To write clean code.

- To write documentation and how to present our work.

We are really thankful to our supervisor Dr. Usama Ijaz Bajwa for his guidance and support throughout the project.

About Team

- Ameer Hamza Naveed: worked on a wide range of technologies, from hardware and the OS to the detection algorithm and DevOps, including Docker and CI/CD pipelines.

- Nauman Umer: mostly managed the web technologies, including Django, Next.js and WebRTC.

- Dr. Usama Ijaz Bajwa: our supervisor and mentor for this project.